Designed with user feedback in mind

Hardware

//004

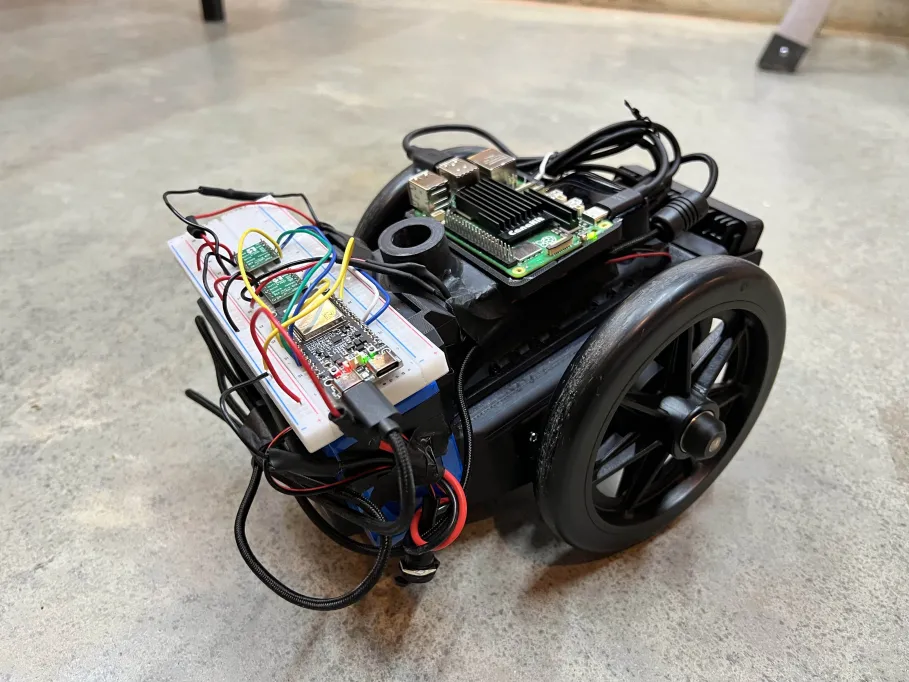

Navis operates on a dual-node kinetic architecture, synchronizing an autonomous aerial scout with a ground-based haptic engine that physically translates flight paths into human guidance. Embedded depth arrays and distributed micro-processing units fuse distinct sensory streams into a single, reactive mobility mesh. This hardware symbiosis lets the system extend human perception beyond physical reach, effectively turning the surrounding environment into a navigable data stream.

Software

//004

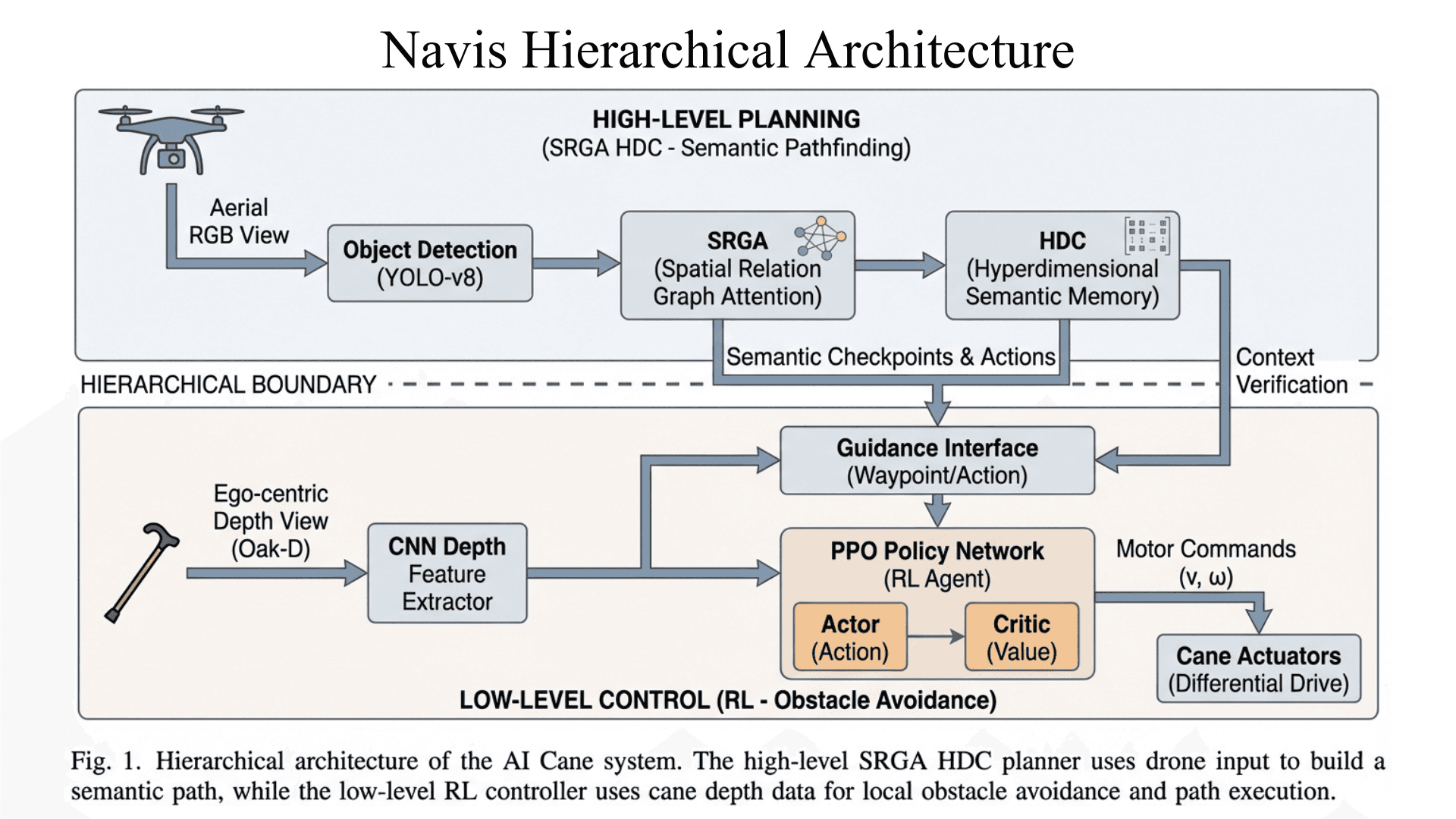

At the core of Navis lies SRGA-HDC, a proprietary architecture that goes beyond traditional computer vision by synthesizing spatial relationships through hyperdimensional vector fields. Rather than relying on fragile pixel-matching, our algorithms construct a viewpoint-invariant semantic graph, allowing the aerial scout to interpret ground-level reality despite radical perspective shifts. Navigation is governed by a stochastic reinforcement learning policy, trained over thousands of zero-shot episodes to master complex physics before ever entering the real world. This computational framework bridges the gap between disparate sensor perspectives, translating raw environmental entropy into coherent pathing logic in milliseconds. The result is a self-correcting neural guidance system that understands destination intent rather than merely avoiding obstacles.
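SRGA-HDC itself is proprietary, so the following is only a minimal sketch of the general idea behind hyperdimensional encoding of a semantic graph, not the Navis implementation. It assumes bipolar hypervectors, elementwise multiplication for binding, a cyclic shift to mark the object role, and a majority vote for bundling. Every name in it (`Codebook`, `encode_scene`, the landmark and relation labels, the dimensionality) is hypothetical and chosen for illustration.

```python
import random

DIM = 4096  # hypervector dimensionality (an assumed value)


def random_hv(rng):
    """Draw a random bipolar hypervector with entries in {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(DIM)]


def bind(*hvs):
    """Bind hypervectors by elementwise multiplication."""
    out = [1] * DIM
    for hv in hvs:
        out = [a * b for a, b in zip(out, hv)]
    return out


def permute(hv, shift=1):
    """Cyclically shift a hypervector; used here to mark the object role."""
    shift %= DIM
    return hv[-shift:] + hv[:-shift]


def bundle(hvs):
    """Bundle hypervectors by elementwise majority vote (ties resolve to +1)."""
    return [1 if sum(col) >= 0 else -1 for col in zip(*hvs)]


def similarity(a, b):
    """Normalized dot product, in the range [-1, 1]."""
    return sum(x * y for x, y in zip(a, b)) / DIM


class Codebook:
    """Lazily assigns one fixed random hypervector to each symbol name."""

    def __init__(self, seed=0):
        self._rng = random.Random(seed)
        self._items = {}

    def __getitem__(self, name):
        if name not in self._items:
            self._items[name] = random_hv(self._rng)
        return self._items[name]


def encode_scene(edges, book):
    """Encode (subject, relation, object) edges as a single hypervector."""
    return bundle(
        [bind(book[s], book[r], permute(book[o])) for s, r, o in edges]
    )


book = Codebook(seed=42)

# Hypothetical scene graph as seen from the air.
aerial_view = [
    ("door", "left_of", "bench"),
    ("bench", "near", "tree"),
    ("tree", "behind", "kiosk"),
]
# The same relations discovered in a different order from the ground.
ground_view = list(reversed(aerial_view))
# A ground view that only recovered two of the three relations.
partial_view = aerial_view[:2]
# A different place entirely.
other_place = [
    ("car", "on", "road"),
    ("sign", "above", "gate"),
    ("bike", "beside", "wall"),
]

aerial_hv = encode_scene(aerial_view, book)
print("same relations:   ", similarity(aerial_hv, encode_scene(ground_view, book)))
print("partial relations:", similarity(aerial_hv, encode_scene(partial_view, book)))
print("different place:  ", similarity(aerial_hv, encode_scene(other_place, book)))
```

Because the encoding depends only on which relations hold and not on pixels or on the order in which relations are observed, two views of the same place map to nearby hypervectors, while unrelated scenes land close to orthogonal. A real system would still need a perception stage that extracts those relations from each viewpoint, which this sketch does not attempt.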

Team

//004

"If you want to go fast, go alone. If you want to go far, go together."

Kevin Xia

Software
